The client had a set of data visualization dashboards that tracked asset performance data in real time. However, these were not easily accessible and involved some effort before stakeholders could extract relevant insights.

The solution was to build enterprise chatbots that could deliver the same insights without taking up much time or effort on the part of the client stakeholders. These bots worked on an “asked and answered” approach: the client could simply ask a question and the bot would analyze the necessary data to give a clear answer.

Solving with AWS

The chatbots were made available as web and iOS applications, and built entirely using AWS products. This was primarily because the client was already using AWS Cloud for hosting services and it made sense to build new solutions within the same ecosystem.

The Solution Architecture

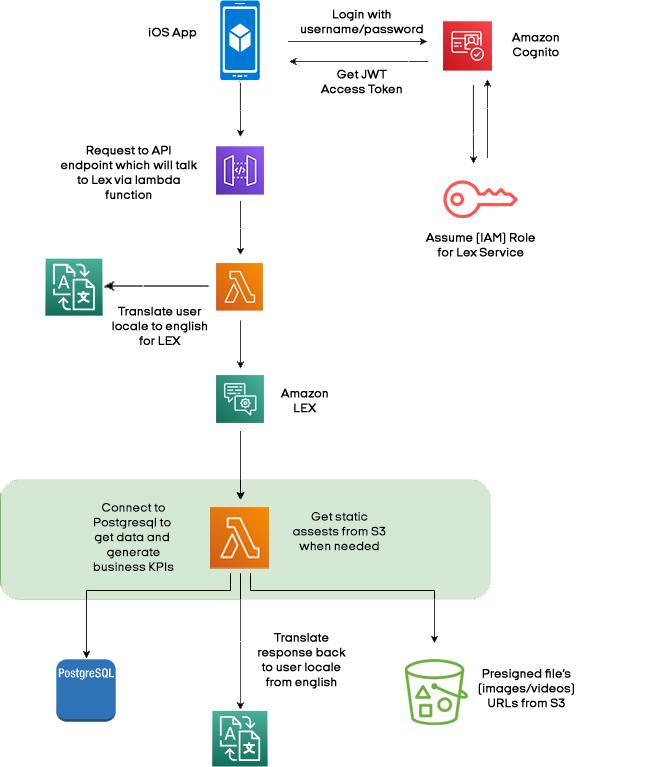

Here’s how the complete chatbot workflow was designed to operate:

- The user's query, entered in the chatbot, hits the API Gateway

- It is passed on to AWS Lambda, which uses the location where the query originates to determine its language

- Amazon Translate converts the query to English and passes it on to Amazon Lex

- Lex interprets the query and identifies the intent, that is, the data the user wants

- The intent is passed on to AWS Lambda, which queries the PostgreSQL database to find the right answer

- Amazon Translate translates the final response back into the original language

- The translated response is passed on to Lex, which delivers it to the user in the app
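The workflow above can be read as a simple composition of stages. The following is a minimal sketch of that composition, not the project's actual code: `run_pipeline` and its four callables are hypothetical stand-ins for the language lookup, the translation service, Lex, and the answering Lambda.

```python
from typing import Callable, Dict, Tuple

Slots = Dict[str, str]


def run_pipeline(
    query: str,
    country: str,
    detect_language: Callable[[str], str],
    translate: Callable[[str, str, str], str],
    understand: Callable[[str], Tuple[str, Slots]],
    fulfil: Callable[[str, Slots], str],
) -> str:
    """Carry one query through the stages listed above (all callables are stand-ins)."""
    # Work out the query language from where the query originated.
    language = detect_language(country)
    # Convert the query to English unless it already is English.
    english_query = query if language == "en" else translate(query, language, "en")
    # Natural language understanding: extract the intent and its slot values.
    intent, slots = understand(english_query)
    # Business logic: look up the data and phrase an answer in English.
    english_answer = fulfil(intent, slots)
    # Translate the answer back into the user's language before delivery.
    if language == "en":
        return english_answer
    return translate(english_answer, "en", language)
```

Keeping each stage behind a plain callable makes it possible to exercise the flow with fakes before any AWS service is wired in.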

AWS Solutions at Play

Amazon Lex

Amazon Lex is a conversational interface framework used to design how a chatbot understands natural-language questions and carries a conversation forward. Its deep learning capabilities are what enable chatbots to identify the intent behind a particular question, understand the context, and give back an appropriate response.

The basic elements involved in designing the flow of interaction are utterances, intents, and slots. For this particular project, here’s how these elements were defined:

- Utterances: These were sample questions that a user could possibly ask the chatbots. These questions, along with several variations of each, were fed into Lex. This training data helped Lex understand the range of possible natural language questions. Additionally, deep learning capabilities allowed Lex to extrapolate from the given variations of a question and expand its vocabulary to understand newer variations.

- Intent: Lex was trained to strip down each utterance to a basic intent. This is the exact information or piece of data that the user wants to know. This key intent is passed forward and processed to extract the relevant answer.

- Slots: These were values that help qualify a particular utterance or intent. They were necessary for questions that need additional data to be answered correctly.

For example, if the question is, “How many of a particular equipment are switched on?”, the chatbot needed to ask follow-up questions on the region, site, time frame, etc. All of these (region, site, date range) can be defined as slot values for the “equipment switched on” intent.

Training data around slot values and possible permutations and combinations of slot values was also fed into Lex. This allowed the chatbot to accurately qualify questions, and also spot new slot values when they occurred.
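To make the relationship between utterances, intents, and slots concrete, here is a small illustrative model in plain Python. It is only a sketch: the `Intent` class, the intent name, the sample utterances, and `next_slot_to_ask` are hypothetical, and in the real system Lex itself manages slot elicitation.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Intent:
    """Illustrative model of an intent: sample utterances plus required slots."""
    name: str
    utterances: List[str]
    required_slots: List[str] = field(default_factory=list)


# Hypothetical definition of the "equipment switched on" intent.
EQUIPMENT_SWITCHED_ON = Intent(
    name="EquipmentSwitchedOn",
    utterances=[
        "How many units are switched on",
        "How many units are switched on in {region}",
    ],
    required_slots=["region", "site", "date_range"],
)


def next_slot_to_ask(intent: Intent, filled: Dict[str, Optional[str]]) -> Optional[str]:
    """Return the first required slot that has no value yet, or None if all are filled."""
    for slot in intent.required_slots:
        if not filled.get(slot):
            return slot
    return None
```

With only the region known, `next_slot_to_ask(EQUIPMENT_SWITCHED_ON, {"region": "EMEA"})` returns `"site"`, which corresponds to the follow-up question the chatbot would ask next.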

Amazon Cognito

Amazon Cognito is a solution to securely manage user sign-up, sign-in and access controls within an application. For the client, Srijan used Amazon Cognito and AWS Identity and Access Management (IAM) to create users, store user data, create user groups, and enforce access control based on authorization.
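As an illustration of group-based access control, the sketch below checks a user's groups against the groups allowed for an intent. Cognito tokens carry group membership in the `cognito:groups` claim; everything else here is an assumption made for the example, including the group names, the per-intent mapping, and the idea that the check runs on already verified and decoded token claims.

```python
from typing import Any, Dict, Set

# Hypothetical mapping of intents to the user groups allowed to use them.
INTENT_ALLOWED_GROUPS: Dict[str, Set[str]] = {
    "EquipmentPerformance": {"analysts", "managers"},
    "EquipmentSwitchedOn": {"analysts", "managers", "operators"},
}


def is_authorized(claims: Dict[str, Any], intent_name: str) -> bool:
    """Return True if any of the user's groups is allowed to use the intent.

    `claims` is assumed to be the payload of a token that has already been
    verified; this function does not validate signatures or expiry.
    """
    user_groups = set(claims.get("cognito:groups", []))
    allowed_groups = INTENT_ALLOWED_GROUPS.get(intent_name, set())
    # Unknown intents have no allowed groups, so they are denied by default.
    return bool(user_groups & allowed_groups)
```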

AWS Lambda

AWS Lambda is a serverless computing platform that runs code in response to event triggers. It also automatically manages the resources required to run and scale that code.

For this project, the user intent and slot values gathered by Lex were the triggers for Lambda. Based on these, Lambda would activate to generate an appropriate answer. The responses can be:

- Static: Simple introductory messages or questions, which can be answered without querying the database.

- Business-logic based: All questions around asset performance are answered by querying the database, extracting the answer, re-formatting it into a user-understandable format, and passing it to the user via Lex.

For the client, all asset performance and associated data was stored in PostgreSQL. The business logic defined in Lambda governed how the raw data from the database was computed and interpreted. This is what lets Lambda generate an answer that is relevant to the user’s question, rather than return bare data points.
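A fulfillment function along these lines might look like the sketch below. It assumes the Lex V1 Lambda event and response shapes (`currentIntent`, `dialogAction`); the intent names, the static message, and the `make_handler` factory are hypothetical, and the database access is left behind a callable rather than shown.

```python
from typing import Any, Callable, Dict

# Hypothetical static replies that need no database lookup.
STATIC_ANSWERS: Dict[str, str] = {
    "Greeting": "Hi! Ask me about asset performance.",
}


def close(message: str) -> Dict[str, Any]:
    """Build a Lex V1 style response that ends the conversation turn."""
    return {
        "dialogAction": {
            "type": "Close",
            "fulfillmentState": "Fulfilled",
            "message": {"contentType": "PlainText", "content": message},
        }
    }


def make_handler(
    business_logic: Dict[str, Callable[[Dict[str, Any]], str]]
) -> Callable[[Dict[str, Any], Any], Dict[str, Any]]:
    """Create a Lambda handler that routes intents to static or computed answers."""

    def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
        intent = event["currentIntent"]
        name = intent["name"]
        # Static responses are returned directly, without touching the database.
        if name in STATIC_ANSWERS:
            return close(STATIC_ANSWERS[name])
        # Business-logic responses are computed from the slot values; the
        # callable is where the PostgreSQL query would live.
        slots = intent.get("slots") or {}
        return close(business_logic[name](slots))

    return lambda_handler
```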

For example, for a particular intent titled “equipment performance”, the business logic is to define three KPI values - high performance, good enough, and switched off - with each value spanning a given range of a performance metric. To answer this query, Lambda will pull this metric for all equipment, and then sort them into the KPIs as per the defined business logic. And that’s the answer that the user receives: X machines are high performance, Y are good enough and Z are switched off.
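The KPI bucketing described above can be sketched in a few lines. The threshold value and the rule that a metric of zero or less means "switched off" are assumptions for illustration only; the actual ranges were part of the client's business logic and are not given here.

```python
from collections import Counter
from typing import Iterable

# Hypothetical threshold; the real ranges were defined with the client.
HIGH_PERFORMANCE_MIN = 80.0


def classify(metric: float) -> str:
    """Map one equipment's performance metric to a KPI bucket."""
    if metric <= 0:
        return "switched off"
    if metric >= HIGH_PERFORMANCE_MIN:
        return "high performance"
    return "good enough"


def summarize(metrics: Iterable[float]) -> str:
    """Count equipment per KPI bucket and phrase the result as an answer."""
    counts = Counter(classify(m) for m in metrics)
    return (
        f"{counts['high performance']} machines are high performance, "
        f"{counts['good enough']} are good enough and "
        f"{counts['switched off']} are switched off."
    )
```

For example, `summarize([95, 82, 40, 0])` yields "2 machines are high performance, 1 are good enough and 1 are switched off."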

The business logic for all intents was defined in close consultation with the client, depending on their operational needs.

Amazon Translate

Amazon Translate is a deep-learning powered neural translation service. Given that the client’s equipment and stakeholders were spread across different geographies, Translate was leveraged to make multilingual operation possible.

AWS Lambda identified the geography where the utterance originated and used it to determine the utterance language. Amazon Translate then translated the utterance into English before it was passed on to Amazon Lex.

Similarly, once the answer was generated by Lambda in English, it was passed through Amazon Translate to convert it back to the original language.
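A minimal sketch of the translation round trip follows. The `translate_text` call and its `Text`, `SourceLanguageCode`, and `TargetLanguageCode` parameters are the boto3 Translate client API; the country-to-language table and the helper names are assumptions for illustration. The client is passed in as a parameter, so in Lambda it would be something like `boto3.client("translate")`.

```python
from typing import Any, Dict

# Hypothetical lookup from the caller's country code to a language code.
COUNTRY_TO_LANGUAGE: Dict[str, str] = {"DE": "de", "FR": "fr", "JP": "ja"}


def language_for(country_code: str) -> str:
    """Pick the language for a country, falling back to English."""
    return COUNTRY_TO_LANGUAGE.get(country_code.upper(), "en")


def translate(client: Any, text: str, source: str, target: str) -> str:
    """Translate text with an Amazon Translate client, skipping no-op calls."""
    if source == target:
        return text
    response = client.translate_text(
        Text=text,
        SourceLanguageCode=source,
        TargetLanguageCode=target,
    )
    return response["TranslatedText"]
```

The same helper covers both directions: `translate(client, query, language, "en")` on the way in and `translate(client, answer, "en", language)` on the way out.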

Amazon S3

Amazon S3 is a secure and scalable object storage service. Static equipment images and other graphs and charts generated to accompany chatbot answers were stored on Amazon S3. It was set up to communicate only with AWS Lambda and was not accessible by other applications or by direct queries.

In cases where the user wanted to view a particular equipment model, or other visual data, AWS Lambda retrieved the relevant image from S3 as a temporary URL. This was then passed on to Lex, which displayed the image to the user.
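One common way to produce such a temporary URL is an S3 presigned URL. The sketch below uses the boto3 `generate_presigned_url` client method and attaches the result to a Lex V1 style response card; the bucket and key values, the five-minute expiry, and the helper names are assumptions, and whether the project used presigned URLs specifically is not stated. The S3 client is passed in, so in Lambda it would be something like `boto3.client("s3")`.

```python
from typing import Any, Dict


def temporary_image_url(s3_client: Any, bucket: str, key: str, expires_in: int = 300) -> str:
    """Create a time-limited URL for a private S3 object (expiry in seconds)."""
    return s3_client.generate_presigned_url(
        "get_object",
        Params={"Bucket": bucket, "Key": key},
        ExpiresIn=expires_in,
    )


def image_response(message: str, title: str, image_url: str) -> Dict[str, Any]:
    """Build a Lex V1 style response that shows an image card with a message."""
    return {
        "dialogAction": {
            "type": "Close",
            "fulfillmentState": "Fulfilled",
            "message": {"contentType": "PlainText", "content": message},
            "responseCard": {
                "version": 1,
                "contentType": "application/vnd.amazonaws.card.generic",
                "genericAttachments": [{"title": title, "imageUrl": image_url}],
            },
        }
    }
```

Because the URL expires, the bucket itself can stay private while the app still renders the image for the duration of the conversation.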

Business Benefits

- The bot ecosystem helped the client close (upsell) business worth 90 million USD.

- One of the bots increased the user retention of the beta user group from 8% to 42%, which allowed the sales team to upsell more of the products.

- The bot applications allowed the analytics team to automate many tasks that were previously handled in spreadsheets.

Srijan is an Advanced Consulting Partner for Amazon Web Services (AWS). It is currently working with enterprises across media, travel, retail, technology and telecom to drive their digital transformation, leveraging a host of AWS solutions. Srijan's expert team of certified AWS engineers is working with machine learning and natural language understanding to create enterprise chatbots for diverse industry use cases.

Looking to develop an effective enterprise bot ecosystem? Just drop us a line and our team will get in touch.