The ‘Real-Time’ Landscape

Real-time computing holds great promise, especially when it is the foundation of key management processes. However, even a minor deviation from the performance expected of a real-time system can cause major discrepancies in a process's output as the system drifts towards near-real-time operation.

The key difference between a real-time and a near-real-time process is the speed at which data is processed. Although the gap between the two may be a matter of minutes, seconds, or even milliseconds, it has a huge impact on critical functions, business continuity, and operational performance metrics.

Our team worked closely with a client on an API Modernisation engagement and discovered a major asymmetry in a monetization process that was supposed to run on real-time processing. Industry opinion largely regards real-time systems as difficult to achieve and maintain. This post explains how our team engineered an accessible yet reliable architecture, using an external counter service, to attain accurate and consistent real-time processing.

‘Real-Time’ API Monetization

Business Context

The API Monetization Platform under consideration could choose between Pay-Per-Use (PPU), Pay-Fixed-Rate (PFR), lease-based, subscription-based, and dynamic pricing mechanisms, all of which are common in the API-driven cloud services vertical. The platform adopted the PPU pricing mechanism, as it is one of the most accurate models: it charges based on the API products offered and their actual consumption.
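As a rough illustration of what a PPU charge amounts to, the sketch below multiplies call counts by per-call prices. The product names and prices are hypothetical placeholders, not the platform's real catalogue or rates.

```python
from __future__ import annotations

from decimal import Decimal

# Hypothetical per-call prices, for illustration only.
PRICE_PER_CALL = {
    "geocoding": Decimal("0.002"),
    "sms": Decimal("0.010"),
}


def ppu_charge(usage: dict[str, int]) -> Decimal:
    """Return the total Pay-Per-Use charge for a usage record (product -> call count)."""
    return sum(
        (PRICE_PER_CALL[product] * calls for product, calls in usage.items()),
        Decimal("0"),
    )
```

Because the charge is derived directly from the consumption count, any delay or error in capturing that count flows straight into the bill.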

Problem Discovery

Since the platform itself was quite comprehensive, the performance system was clocking over 2000 transactions per second (TPS). This is where the real-time computation challenge was first discovered. The entire invoicing, billing and charging system depended on accurate analysis of the large set of frequently updated data in real-time.

We found a major discrepancy: because they depended on the real-time computing system, the prepaid wallets were running into negative balances, while the post-paid ones were breaching their credit limits.

A closer examination showed that the system was actually running on a near-real-time basis, contrary to what had been asserted earlier. This added latency to the capture of resource consumption and, in turn, resulted in inflated charges for developers.

This created an asymmetry between actual resource consumption and billed consumption, which could result in:

- Increased Churn Rate: The prepaid developers might simply avoid using the wallet, leading to a severe loss of future revenue streams.

- Compliance Risk: The post-paid developers would demand scrutiny of all the charges. If they see intent behind the inflated charges, they might escalate the matter through dispute resolution systems, increasing the platform's compliance risk.

- Damaged Brand Equity: The developer community is active in online forums. Overcharging issues can hence cause severe damage to the platform’s brand equity.
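The wallet behaviour described above can be illustrated with a small simulation. This is a simplified model under stated assumptions, not the client's system: the gateway authorizes each call against the last synced balance, and `sync_every` (a hypothetical parameter) is the number of calls between syncs, with `sync_every=1` standing in for real-time deduction.

```python
def simulate(balance: int, cost: int, calls: int, sync_every: int) -> int:
    """Return the final wallet balance when the gateway only sees the
    balance as of the last sync (sync_every=1 models real-time deduction)."""
    visible = balance  # balance the gateway uses to authorize calls
    pending = 0        # charges captured but not yet applied to the wallet
    for i in range(1, calls + 1):
        if visible < cost:
            break      # gateway rejects the call: wallet appears empty
        pending += cost
        if i % sync_every == 0:
            visible -= pending  # the sync applies the batched charges
            pending = 0
    return visible - pending
```

With a starting balance of 10 and a cost of 1 per call, real-time deduction stops at zero, while syncing only every 25 calls lets the wallet be overdrawn before the gateway notices.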

Potential Solution Mapping

Some of the solutions considered to fix the real-time processing of revenue calculations are described here:

- Building a New Monetization Solution

Pros:

- The firm can build a more sophisticated solution that encompasses key developer needs like paired wallets, expiring credits, etc.

Cons:

- Shifting to a new monetization solution will require lead time, so it would not address the immediate concerns.

- The firm might have to allocate significant resources to developing, testing, and deploying the new solution.

- Using Alternative Monetization Solution

Pros:

- The firm can evaluate more effective and efficient revenue models like subscriptions and percentage of resource consumption charges.

Cons:

- The firm will have to shift its entire charging basis and the revenue model.

- Other operational processes will get affected as the firm’s resources will be based on each unit of usage.

- If this results in skewed or high charges for developers, the firm may experience lower profits or an increased churn rate.

- The lead-time for shifting to a new solution will keep the existing challenges unsolved for a considerable period.

- Engineering an External Counter Service

Pros:

- The firm can use an external add-on counter service that pairs with the API Management platform for monetization.

- It can be quickly developed with the help of a technology partner within a considerably shorter timeframe.

- The new solution would not require infrastructure or human capital investments and will immediately address the pressing issues.

Cons:

- Counter service should be in the same region to reduce network latency.

- There might be a need to fix the lag in updating the balance at multiple places.

Solution Engineering

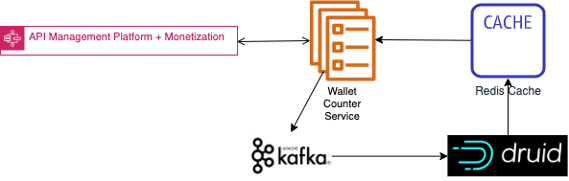

Since it promised overall efficiency, the external counter-service-driven solution was the preferred choice. To attain auto-scaling, it was to be run either with Docker or with Kubernetes. Here are the two technical solutions considered:

- Apache Kafka + Druid

The first solution was built on Apache Kafka and Apache Druid. The idea was to build a microservice that would connect Kafka and Druid across a common Virtual Private Cloud (VPC) network and auto-scale based on the load. In terms of responsibilities, the microservice would provide the deduction and load-up logic, Kafka would handle the incoming data stream, and Druid would store the wallet transactions.

The wallet balances were to be reflected in the API layer using Redis. This system was designed to show accurate developer wallet balances in real time, even at high TPS.
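The deduction and load-up logic carried by the microservice can be sketched in a few lines. This is a minimal in-memory stand-in under stated assumptions: a dictionary and a lock replace the Redis and Druid stores described above, amounts are integers in minor currency units, and the names `WalletCounter` and `InsufficientBalance` are hypothetical.

```python
from __future__ import annotations

import threading


class InsufficientBalance(Exception):
    """Raised when a deduction would take a wallet below zero."""


class WalletCounter:
    """In-memory stand-in for the counter service's deduction and load-up logic."""

    def __init__(self) -> None:
        self._balances: dict[str, int] = {}
        self._lock = threading.Lock()

    def load_up(self, developer_id: str, amount: int) -> int:
        """Add funds to a wallet and return the new balance."""
        with self._lock:
            new_balance = self._balances.get(developer_id, 0) + amount
            self._balances[developer_id] = new_balance
            return new_balance

    def deduct(self, developer_id: str, amount: int) -> int:
        """Deduct funds and return the new balance, or raise if funds are short."""
        # Check and update under one lock so concurrent calls cannot overdraw.
        with self._lock:
            balance = self._balances.get(developer_id, 0)
            if balance < amount:
                raise InsufficientBalance(developer_id)
            self._balances[developer_id] = balance - amount
            return self._balances[developer_id]
```

The point of the sketch is that the balance check and the deduction happen as one atomic step, which is what keeps a prepaid wallet from going negative under concurrent traffic.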

Pros:

- The solution would provide quick analytics across the functions and provide the analytics team with ample visibility.

- The configuration could support data pipeline integrations and provide reception to ad-hoc real-time & historical data queries, with high concurrency.

- It would be suitable for deduction queries as they can now get processed faster.

- It would reasonably control the ripple effect of near-real-time synchronization of the monetization system on top of everything.

Cons:

- Since Kafka and Druid systems were being synchronized with the larger API Monetization platform, it may require maintenance costs later in the project's lifecycle.

- The firm might need a Managed Kafka on PROD later in the process.

- Apache Kafka + ksqlDB Counter Service

The second solution focused on a more distributed, scalable, and consistent, yet still real-time, option: Apache Kafka and ksqlDB. This solution was based largely on an event streaming platform engineered primarily for processing data streams, one known across the industry for continuous computation over unbounded event streams.

The solution design was close to the first one, with Apache Kafka and ksqlDB deployed in the same VPC network. As in the first alternative, the microservice carried the deduction and load-up logic. Kafka would manage the incoming data streams, and ksqlDB would hold the transactions. At the same time, the accurate charges would be reflected in the API layer using Redis.
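The idea of continuously computing balances over an event stream can be illustrated in plain Python. This is a simulation of the concept only, not ksqlDB syntax or its API; the event fields (`developer_id`, `type`, `amount`) and the `LOAD`/`DEDUCT` event types are assumptions made for the example.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Iterable, Iterator


def running_balances(events: Iterable[dict]) -> Iterator[tuple[str, int]]:
    """Yield (developer_id, balance) after each wallet event, in arrival order."""
    balances: dict[str, int] = defaultdict(int)
    for event in events:
        # LOAD events add to the wallet; anything else is treated as a deduction.
        sign = 1 if event["type"] == "LOAD" else -1
        balances[event["developer_id"]] += sign * event["amount"]
        yield event["developer_id"], balances[event["developer_id"]]
```

Each incoming event immediately updates the affected balance, so a consumer of the output always sees the balance as of the latest event rather than as of the last batch run.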

Pros:

- The solution had auto-scaling capabilities.

- The proposed solution would act as a considerable buffer against near-real-time monetization.

- The solution would provide accurate charges in developer wallets in real time, even at high TPS.

- The firm can expect shorter processing times with this solution.

Cons:

- The firm would have to bear relatively higher maintenance overheads for Kafka and ksqlDB, along with the necessary investment in infrastructure.

- If the API gateway is in another network or region, requests will incur some network latency, affecting the accuracy of the real-time process.

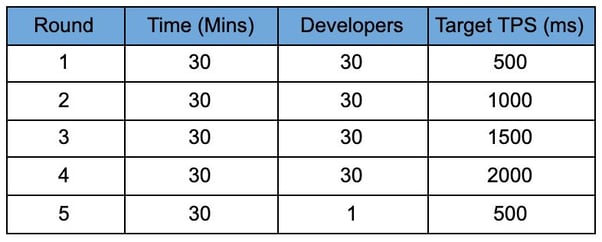

Performance Analysis to Identify the Right Solution

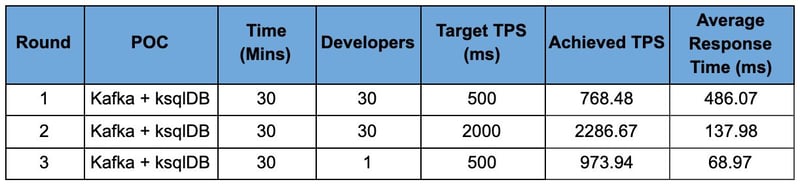

After validating the functional flow at the POC level, we came up with the following metric as a benchmark to ensure that the solution could scale independently to ~2000 TPS:

Here are test results for:

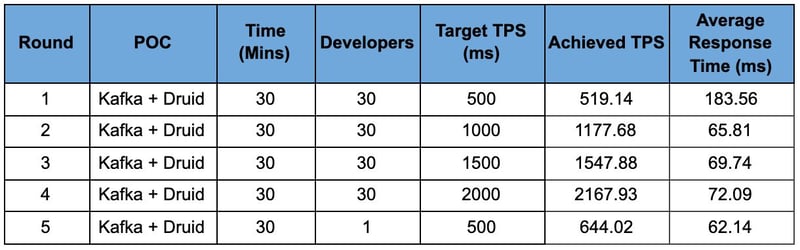

Apache Kafka + Apache Druid POC

Apache Kafka + ksqlDB POC

Performance and Business Impact Parameters

- Latency: This metric measured the possible latency after the potential solution was deployed. The second solution (Kafka & ksqlDB) could experience some latency if the API gateway was in a different network and region.

- Operational Efficiency: With auto-scaling, both the solutions had adaptive resource-consumption mechanisms that could scale up and down as service queries were found to be increasing or decreasing.

- Potential Maintenance & Infrastructure Overheads: Both the solutions would need a managed Kafka on PROD later in the process. However, Kafka & ksqlDB might require higher overheads as well as some investments in the necessary infrastructure.

Based on the test results alone, both solutions showed promising and reliable real-time processing capabilities.
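For readers who want to run a rough throughput check of their own, a sketch along these lines may be a starting point. It is a single-threaded, in-process measurement with a hypothetical `measure_tps` helper, not the harness used for the POCs above; a realistic test would drive the deployed service with a dedicated load-testing tool.

```python
import time
from typing import Callable


def measure_tps(operation: Callable[[], object], total_calls: int) -> float:
    """Call `operation` total_calls times and return the achieved calls per second."""
    start = time.perf_counter()
    for _ in range(total_calls):
        operation()
    elapsed = time.perf_counter() - start
    # Guard against a zero reading on very coarse timers.
    return total_calls / elapsed if elapsed > 0 else float("inf")
```

Passing in a function that performs one deduction against the counter service gives a first indication of whether the ~2000 TPS target is within reach.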

Implementation Recommendations

While the solutions met the requirements, the solution engineering team asked the API Monetization Platform to make some adjustments to avoid an identical issue post-implementation:

- Homogeneous Location Between the API Monetization Platform and the Counter Service

The solutions were designed on the assumption that the API Monetization Platform and the counter service would be in the same region. This gives a high degree of control over the possible latency.

- Deliberate Update Log Management Post Syncing

While the solutions were good enough to absorb the near-real-time latency, they still depended on accurate transaction logs maintained across the system.

- Uniform data storage for all redundant data between the API Monetization Platform and the Counter-Service database

The counter service had now become a critical part of the network and had its own database. Thus, it became essential for the firm to keep all the data shared between the API Monetization Platform and the solution database uniform.

- Structuring a Kafka-based refund policy

In the Kafka-based solution, if a Kafka consumer fails, make sure that the developer is refunded the deducted amount. This helps in reconciliation and provides a safety net if the firm sees any discrepancies in developer wallet balances that are attributable to systemic issues.
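The refund recommendation can be sketched as a compensating action around the consumer call. This is a minimal illustration under stated assumptions: `consume` is a hypothetical callable standing in for whatever processes the charge event downstream, and the in-memory `balances` and `ledger` structures stand in for the real stores.

```python
from __future__ import annotations

from typing import Callable


def charge_with_refund(
    balances: dict[str, int],
    ledger: list[tuple[str, str, int]],
    developer_id: str,
    amount: int,
    consume: Callable[[dict], None],
) -> bool:
    """Deduct `amount`, hand the event to `consume`, and refund if it raises."""
    if balances.get(developer_id, 0) < amount:
        return False  # not enough funds: nothing deducted, nothing to refund
    balances[developer_id] -= amount
    ledger.append(("DEDUCT", developer_id, amount))
    try:
        consume({"developer_id": developer_id, "amount": amount})
    except Exception:
        # Compensating refund: restore the balance and record it for reconciliation.
        balances[developer_id] += amount
        ledger.append(("REFUND", developer_id, amount))
        return False
    return True
```

Recording both the deduction and the refund in the ledger, rather than silently restoring the balance, is what makes later reconciliation possible.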

Use-Cases for the Solution

Though the solution was engineered for the API Monetization Platform, it has clear applications across a wider range of verticals:

- Telecom Industry APIs

Telecom companies that provide enterprise solutions can streamline their invoicing, market sizing, or asset valuation processes with the same solution. With the solution in place, the firms can focus on capturing the smallest unit of data consumption and transmission across their services.

Instead of offering blanket pricing across services, the telecom companies can provide real-time data on consumption as well as services like SMS with respect to subscriber information and provide accurate billing information. The solutions can also shorten the lead-time for third-party mobile top-ups.

- Public Connectivity Platforms

Connectivity solutions like public WiFi systems often tend to have either time-based charges or data-consumption-based segments for charging the users. Across these touchpoints, which are commonly available at airports, malls, and other public places, the infrastructure operating companies can provide more tailored and usage-based WiFi consumption charges. Users can get real-time information on their usage and corresponding charges, instead of overpaying for the entire timeframe or unused data.

- Fintech

Virtual and digitally connected cards, wallets, virtual payment systems, and APIs can run automated, accurate, and quicker payment processing operations. With a more reliable and consistent real-time processing system, fintech products can provide instant trade settlements between buyers and sellers, without having to take credit risk with prolonged transactions.

Conclusion

To succeed in the API monetization landscape, there is a need to adjust, tweak, and try out multiple dry runs to arrive at the best option. With the external counter service approach, we have established that enterprises can technically implement real-time processing without affecting their existing setup. This approach can be applied in public, private, hybrid, or multi-cloud environments, as an add-on or as a stand-alone solution.
